25. JDBC Connection¶

In this chapter, you will learn how to use JDBC source to write and read data in PySpark. The idea database for spark is HDFS. But not many companies are not willing to move all the data (PII data) into Databricks’ HDFS. Then you have to use JDBC to connect to external database. While JDBC read and write are always tricky and confusion for beginner.

25.1. JDBC Driver¶

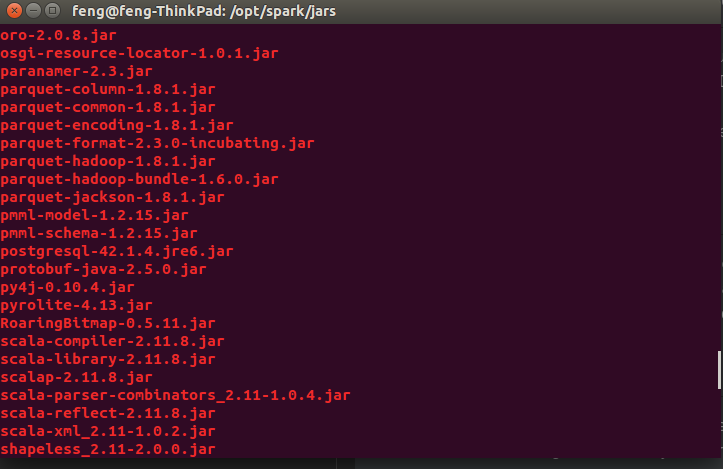

For successfully connection, you need the corresponding JDBC driver for the specify Database. Here I will use Greenplum

database as an example to demonstrate how to get the correct .jar file and where to put the .jar.

25.1.1. Get the .jar file¶

Since Greenplum is using PostgreSQL, you can search with ‘PostgreSQL JDBC Driver’. There is a high chance that

you will reach to this page: https://jdbc.postgresql.org/download.html. Then download the .jar file.

25.2. JDBC read¶

See code JDBC lower-upper Bound

stride = (upper_bound/partitions_number) - (lower_bound/partitions_number)

partition_nr = 0

while (partition_nr < partitions_number)

generate WHERE clause:

partition_column IS NULL OR partition_column < stride

if:

partition_nr == 0 AND partition_nr < partitions_number

or generate WHERE clause:

partition_column >= stride AND partition_column < next_stride

if:

partition_nr < 0 AND partition_nr < partitions_number

or generate WHERE clause

partition_column >= stride

if:

partition_nr > 0 AND partition_nr == partitions_number

where next_stride is calculated after computing the left sideo

of the WHERE clause by next_stride += stride

(stride = (20/5) - (0/5) = 4

SELECT * FROM my_table WHERE partition_column IS NULL OR partition_column < 4

SELECT * FROM my_table WHERE partition_column >= 4 AND partition_column < 8

SELECT * FROM my_table WHERE partition_column >= 8 AND partition_column < 12

SELECT * FROM my_table WHERE partition_column >= 12 AND partition_column < 16

SELECT * FROM my_table WHERE partition_column >= 16

As you see, the above queries generate 5 partitions of data, each containing the values from: (0-3), (4-7), (8-11), (12-15) and (16 and more).

25.3. JDBC write¶

TODO…

25.4. JDBC temp_view¶

TODO…